Using LLMs to extract structured civic data

Parental leave civic hacking pt. 2 (previously): or, using LLMs to extract civic structured data from unstructured content. I tooted a while ago about finding some fun usage of LLMs to extract US rep local office phone numbers where a purely heuristic method failed spectacularly. I finally wrapped this up: we can now extract and update local office numbers from every single house and senate website automatically with my new office-finder tool.

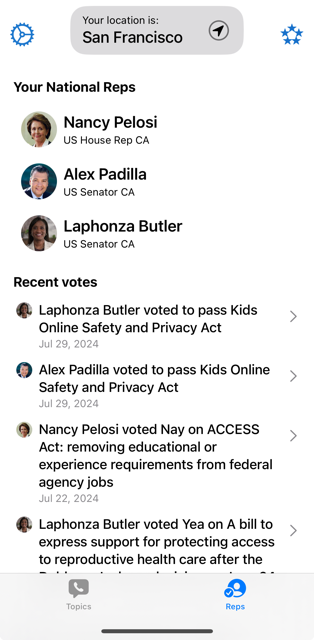

Why is this important? 5 Calls needs an accurate list of local office numbers for our users. For members of the Senate who have many millions of constituents or even higher profile House reps, outpourings of public comment frequently overwhelm the main DC phones and, frankly, sometimes they just turn off the phones entirely or send everything to voicemail. Local offices are far easier to reach a human only by virtue of being less of a public face for a rep.

But these numbers are pretty hard to keep up to date! Basically the only way it happens is if someone emails 5 Calls saying “I tried this number and it didn’t work, my rep’s website says something else.” So having a script anyone can run to ensure everything is up-to-date simplifies this work by a lot… to the tune of 783 new offices, 406 outdated offices, and including 162 reps who previously had no local offices listed previously.

We’re also contributing this back: we use the fantastic united-states/congress-legislators repo as a base for representative information so contributing up-to-date info back to that repo is a win for everyone using it. This tool supports generating a file with the format used in that repo.

using LLMs to build the LLM tool

After a bit of playing around with coding-assist language model tools in previous small projects, the loop finally clicked for me on this so I leaned in while working on this and it definitely made me more productive.

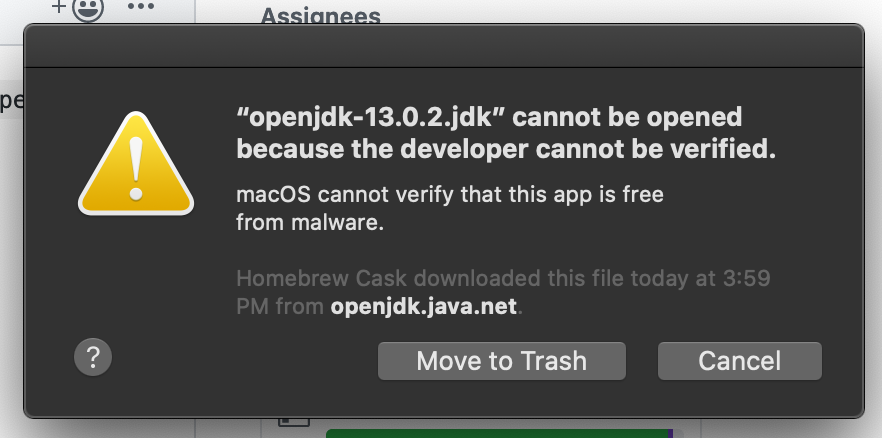

I used Claude 3.5 sonnet both via the web interface for one-off questions (“remind me the syntax for doing x”) as well as the claude dev plugin for vscode for codebase-specific development. Claude dev was the more game-changing integration as it can answer questions using the context of your current project and actually do the work for you. I found myself using it to flesh out the command line arguments I was building, transform data from one model type to another, and prototype basic logic that I just didn’t feel like thinking deeply about.

I would note that it almost never gives me 100% of what I want to accomplish unless the task is incredibly simple, but it provides an immediate base to work on the actual problems that I want to solve and not the tedious remember-how-this-works or write-100-lines-of-boilerplate steps.

A typical example was writing a function that I knew had a couple different edge cases and doing a first pass on the happy paths, then asking Claude to write up test cases for the function. Claude Dev can create files so adding a new test was just clicking a button. Then I review the test cases and add the failing edge cases that I know exist, maybe clean up some redundant test cases that claude added. Then I can go back and focus on handling the edge cases immediately, staying focused on the problem I’m already solving.

A little more on the office-finder tool and things I learned while building it:

We want to parse the data from all of these websites into something machine readable. We can ask for it from the LLM in JSON format and it’ll happily produce some json but that’s not always in the correct format even if we are specific about what parameters we want in the prompt.

We’re late enough to the LLM game that the big providers have already solved this particular problem by providing ways to ask for structured output that is not subject to the same kinds of hallucinations that LLM answers sometimes provide. OpenAI and Gemini refer to this as “structured output”, anthropic has a “JSON mode” and certainly others have similar concepts. The idea is to strongly type the shape and outputs you want and then at the very least the keys will be correct even if the values are sometimes wrong.

(worth noting that pure LLMs are fun but somewhat useless in this regard and the value is really coming from the pairing of pure language model and a certain level of guaranteed correctness. I would certainly like to read more about how pairing these two things is accomplished!)

For the most part, addresses returned by this tool are correctly formatted and represent exactly what’s on the page. There are quirks though, so the process does require some level of human observation. For example, even though I describe how to break out the address, suite and building name in the prompt, sometimes I get an address back that includes a building name in the address field, or “N/A” for the suite field even though I asked for it to be omitted if it doesn’t exist. It’s important to be able to re-run the process for specific sites if one run just goes sideways.

I cache the address data in a local json file so I can either manually fix offices (a small handful of rep websites are structured significantly differently than others, making finding the address text very difficult) or check for changes by rerunning and diffing that file for certain sites. Then another command automatically pulls the existing local office file from the united-states/congress-legislators repo and merges in any changes.

I have some thoughts on what might improve this, like splitting the address extraction from the structured data request, but I have not experimented on if that gives better results yet. Since we’ll need to refetch offices for new reps in the 119th Congress at the start of 2025, perhaps I’ll pick up some of these improvements for that cycle.